Multi-style Generative Network for Real-time Transfer

Abstract


Recent work in style transfer learns a feed-forward generative network that approximates earlier optimization-based approaches, resulting in real-time performance. However, these methods require training a separate network for each target style, which greatly limits scalability. We introduce a Multi-style Generative Network (MSG-Net) with a novel Inspiration Layer, which retains the functionality of optimization-based approaches while matching the speed of feed-forward networks. The proposed Inspiration Layer explicitly matches the feature statistics with the target styles at run time, which dramatically improves the versatility of existing generative networks, so that multiple styles can be realized within one network. The proposed MSG-Net matches image styles at multiple scales and shifts the computational burden to training. The learned generator is a compact feed-forward network that runs in real time after training. Compared to previous work, the proposed network achieves fast style transfer with at least comparable quality using a single network.
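To make "feature statistics" concrete, here is a minimal sketch in plain Python. The abstract does not say which statistics the Inspiration Layer matches, so this sketch assumes the Gram matrix of channel activations, a common choice in the style transfer literature. The helper names `gram_matrix` and `style_distance` are hypothetical and are not taken from the MSG-Net code.

```python
def gram_matrix(features):
    """Channel-by-channel second-order statistics of a feature map.

    `features` is a list of C channels, each a flat list of N spatial
    activations. Returns a C x C matrix of inner products averaged over N.
    (Assumption: the normalization by N is one of several common choices.)
    """
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_distance(features_a, features_b):
    """Sum of squared differences between the two Gram matrices."""
    g_a = gram_matrix(features_a)
    g_b = gram_matrix(features_b)
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(g_a, g_b)
        for x, y in zip(row_a, row_b)
    )


# Toy feature map: 2 channels, 2 spatial positions each.
toy = [[1.0, 2.0], [3.0, 4.0]]
toy_gram = gram_matrix(toy)
```

Optimization-based methods typically reduce a distance of this kind by iterating on the output image. The abstract describes the Inspiration Layer as doing the matching inside the network at run time, which this sketch does not attempt to reproduce.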


Demo and Authors

Paper and Software


ArXiv Preprint [PDF]

Our code is available on GitHub for [Torch], [PyTorch] and [MXNet], including:

- Torch / PyTorch / MXNet implementations of the proposed Inspiration Layer.

- Camera demo and evaluation for the MSG-Net with pre-trained models.

- Training code for MSG-Net with custom styles.


Bibtex

@article{zhang2017multistyle,
  title={Multi-style Generative Network for Real-time Transfer},
  author={Zhang, Hang and Dana, Kristin},
  journal={arXiv preprint arXiv:1703.06953},
  year={2017}
}


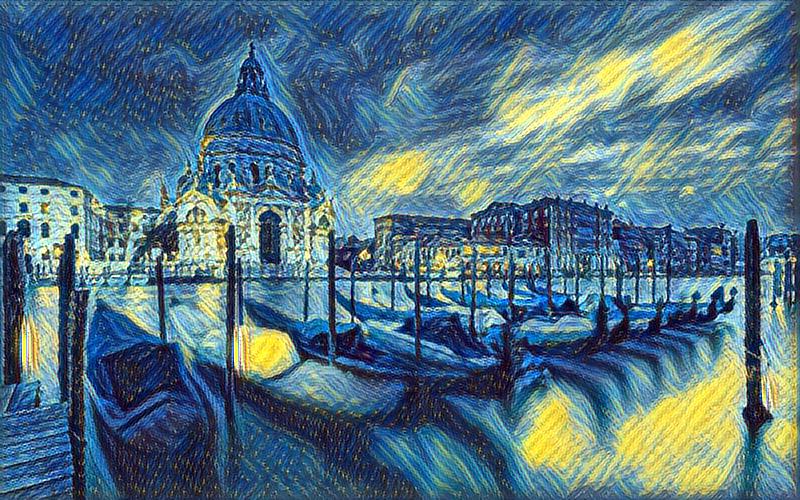

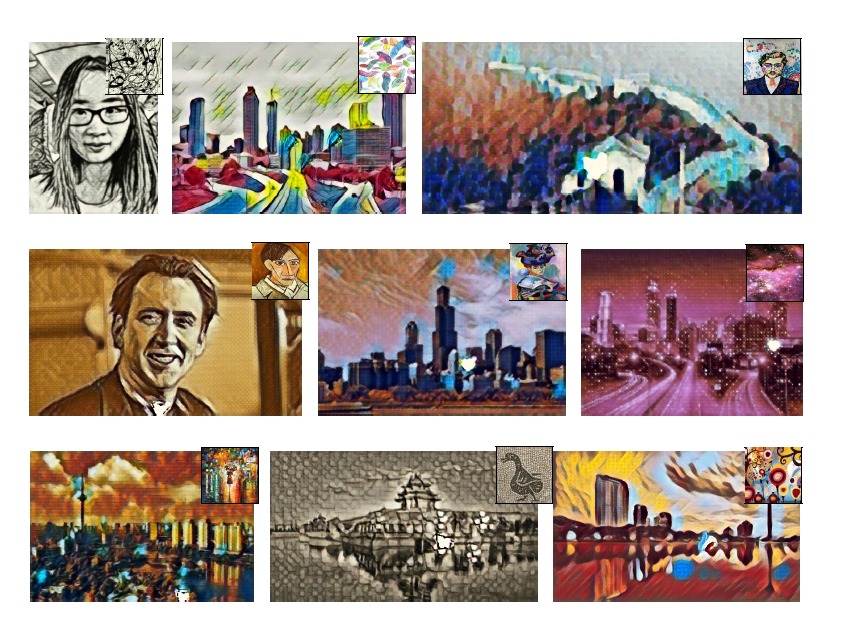

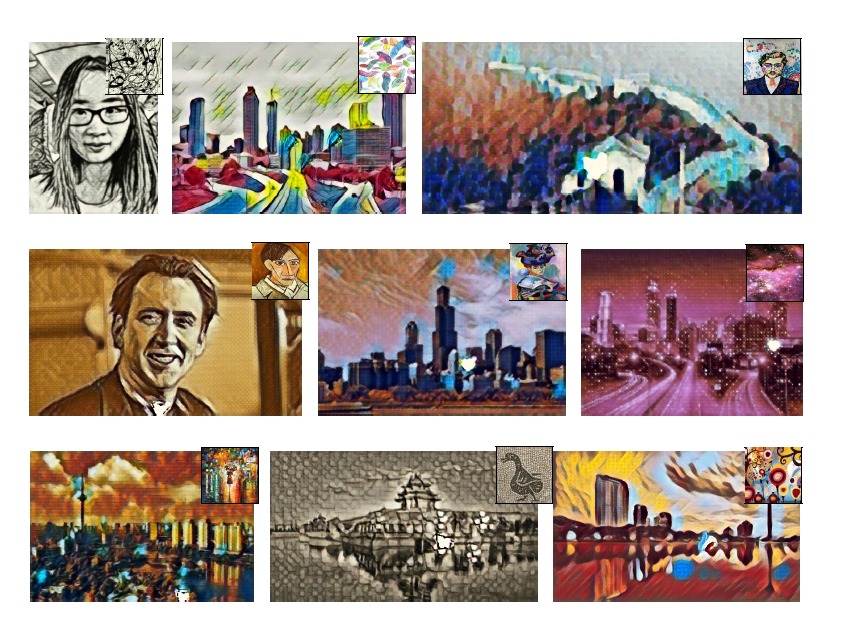

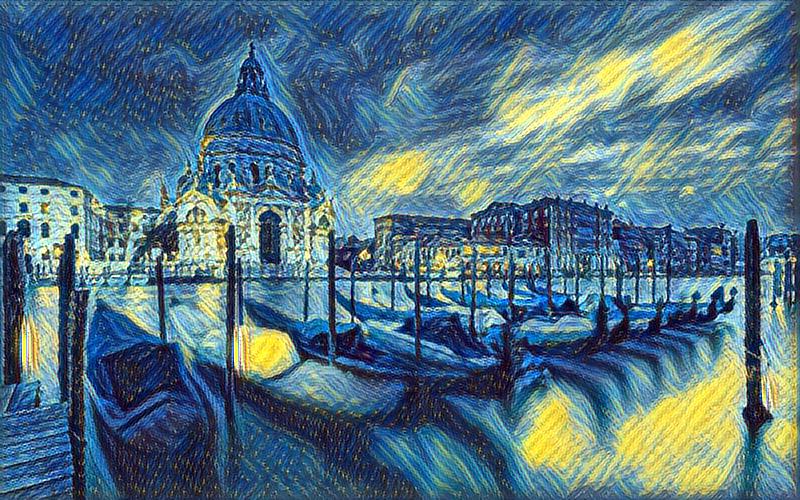

Example Results

More Examples

Acknowledgment

This work was supported by the National Science Foundation award IIS-1421134. A GPU used for this research was donated by the NVIDIA Corporation.

Written on March 20, 2017